Gathering Experimental Evidence To Improve the Design of Agricultural Programs

- by Lori Lynch, Daniel Hellerstein, Nathaniel Higgins and Steven Wallander

- 8/17/2017

Highlights

- Designing or modifying voluntary agricultural programs involves deciding between many design options; testing the options with economic experiments can be a cost-effective tool for developing evidence about which work best.

- A range of experimental methods, from relatively inexpensive studies in controlled lab environments (often with students) to more expensive studies with targeted populations (such as farmers), can assess the benefits and costs of different design options.

- Randomized experiments conducted in the course of operating a program enable its managers to test the impact of potential changes in a real-world setting, and provide the strongest evidence of impact before implementing changes.

Policymakers considering new programs, or novel ways of delivering program services, often have limited information on how actual or potential participants will react to the changes. Learning how these participants are likely to react is key to program success, because success often depends on the details of how new programs—or changes in existing ones—are implemented.

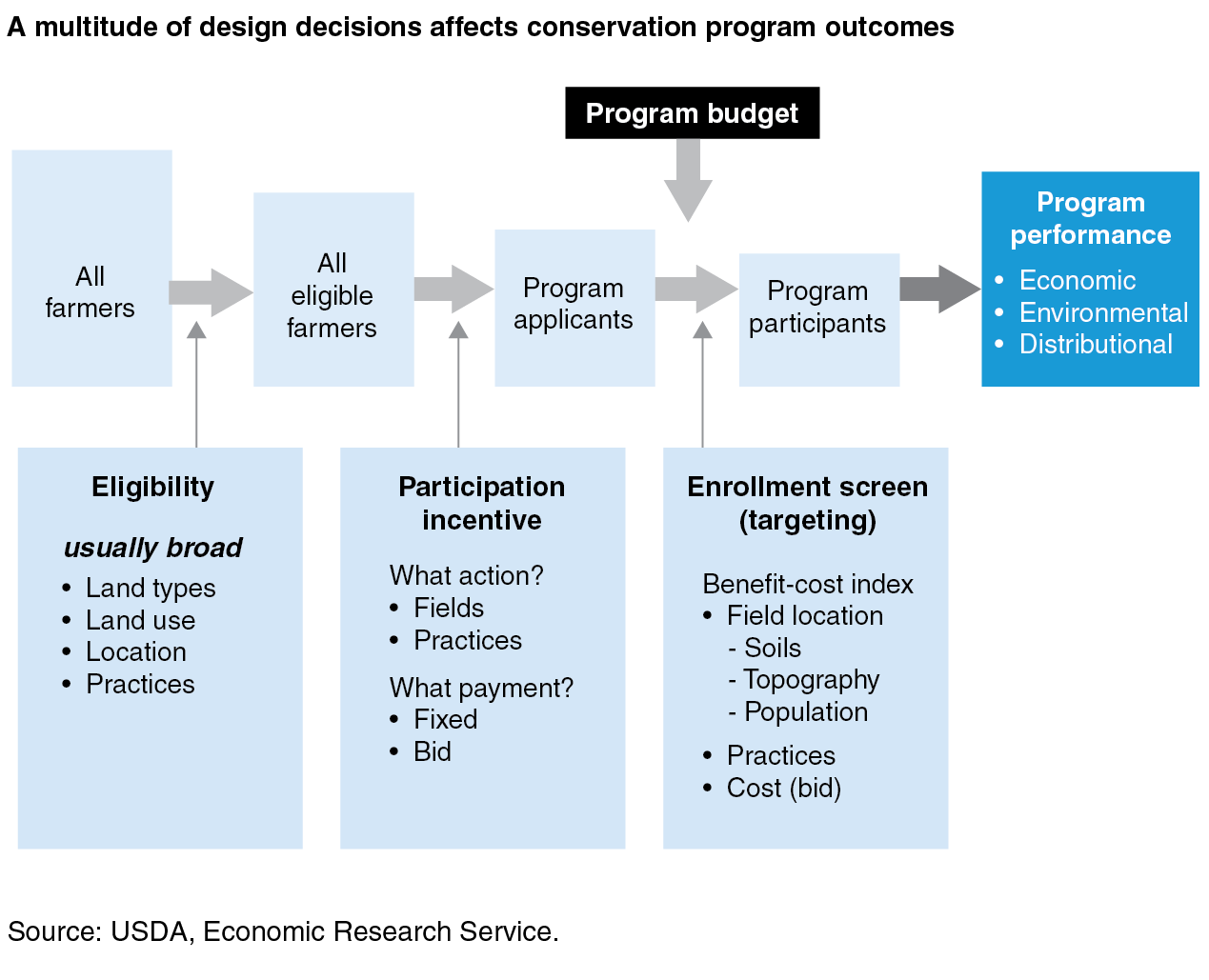

Programs may unintentionally contain barriers that turn participants away or otherwise impact program outcomes. Voluntary conservation programs, for example, contain a number of design options including how eligibility is determined, what payments will be offered for what conservation actions, what type of payments will be made (e.g., fixed-rate payments), and how the program agency will make enrollment decisions. Research from behavioral science shows that design issues—such as burdensome applications or poorly presented choices—can prevent programs from effectively reaching the people they are intended to serve.

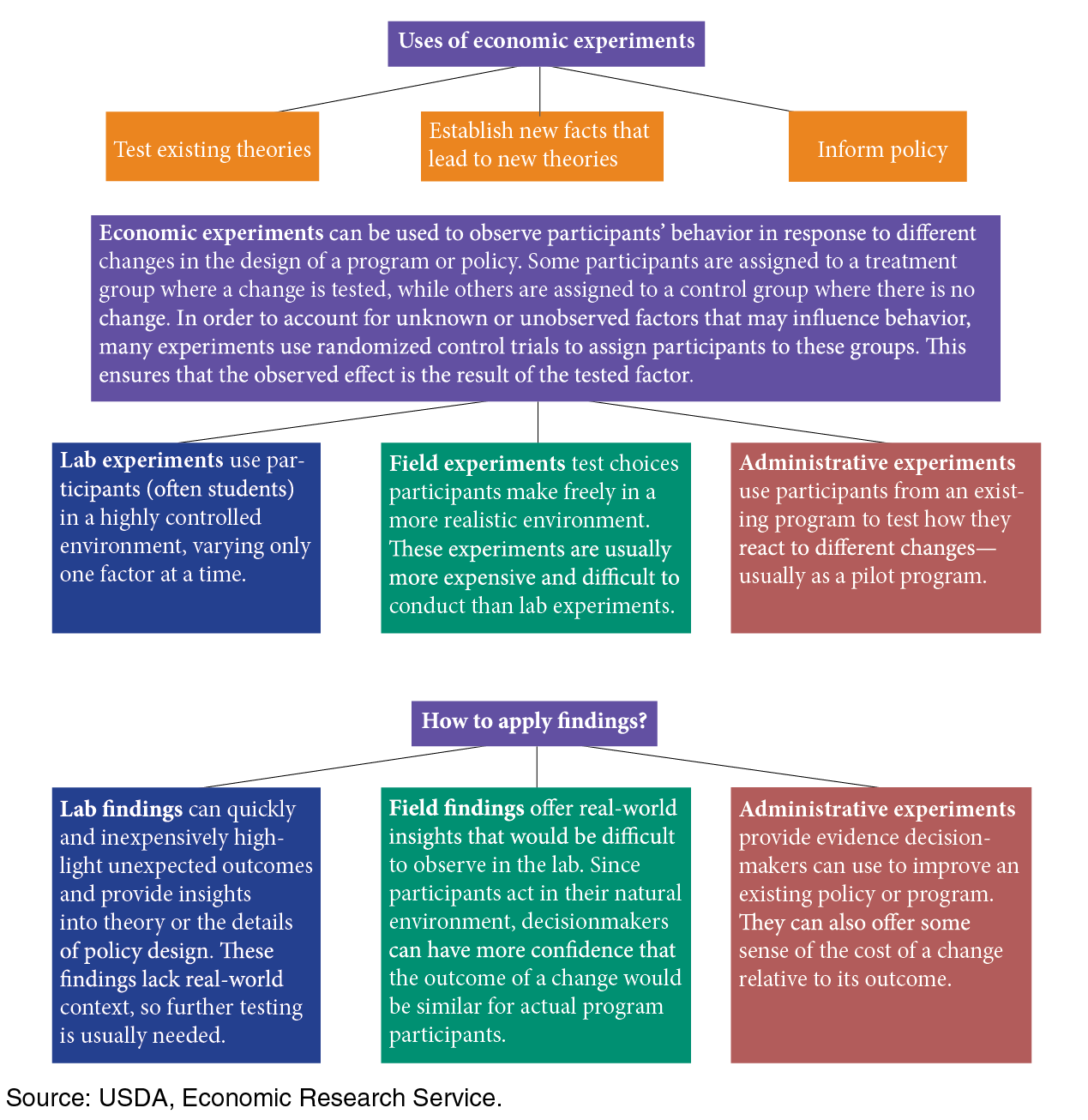

To gather evidence on likely behavioral responses, and thus to improve the odds of success, policymakers can draw on a variety of methods. Commonly used methods include focus groups, expert interviews, surveys, and pilot programs. More recently, economic experiments have attracted a great deal of attention.

Economic experiments can offer evidence to help inform these design decisions, and they may lead to improvements in existing programs or policies that benefit farmers and others. Economic experiments are similar to other types of experiments that are common in agriculture. However, instead of carefully designing a study to understand what factors lead to successful crop growth (e.g., soil health, precipitation, timing, and temperature), researchers apply the fundamental scientific concepts of experimentation to measure human responses to various program elements (e.g., messaging, incentives, timing, and duration).

For example, economic experiments can test how farmers respond when given the option to receive a lower payment today, or a larger one in 9 months, for implementing new conservation practices. They can also test if bonus payments would encourage neighboring farmers to join a program. These experiments can reveal the potential outcomes and unintended consequences of a proposed change before it is implemented.

Researchers can use several different types of economic experiments to test program design options. Lab experiments typically include students on networked computers, field experiments use participants pulled from the population of interest (such as farmers), and administrative experiments introduce systematic changes to existing programs. Using several examples, we illustrate how these different types of experiments can be used to provide insights into how different features may impact the outcomes of an agricultural program.

Although the settings, number and type of participants, and costs all vary among the different types of experiments, they share several features. In particular, they all investigate an outcome of interest by observing responses from different groups of participants. Some participants are presented with the status quo (the control group), and others with a change (the treatment group). In addition, randomness is often used, as when assigning participants to a group, to ensure that unobservable characteristics of the participants are not driving the observed results.
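Random assignment to control and treatment groups can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in any of the studies described here: the helper names, the 50/50 split, the fixed seed, and the outcome data are all hypothetical, and the effect estimate is a simple difference in mean outcomes between the two groups.

```python
import random
from statistics import mean


def assign_groups(participants, seed=0):
    """Randomly split participants into (control, treatment) halves.

    Hypothetical helper: the fixed seed makes the assignment reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(outcomes, control, treatment):
    """Estimated effect: mean outcome of the treatment group minus that of the control group."""
    return mean(outcomes[p] for p in treatment) - mean(outcomes[p] for p in control)


farmers = [f"farm_{i}" for i in range(10)]  # hypothetical participant IDs
control, treatment = assign_groups(farmers)

# Hypothetical outcomes (1 = submitted an offer): 3 of 5 treated farmers
# and 1 of 5 control farmers respond.
outcomes = {p: 1 if p in treatment[:3] or p in control[:1] else 0 for p in farmers}
print(round(difference_in_means(outcomes, control, treatment), 2))  # 0.4
```

Because chance alone decides who lands in which group, characteristics the researcher cannot observe should be balanced across the groups on average, which is what lets the difference in means be read as the effect of the change.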

A Lab Experiment With Students: Improving Conservation Programs’ Enrollment Process

Auctions are a cost-effective way to select among offers when more individuals are interested in participating than can be enrolled. For example, the USDA Conservation Reserve Program (CRP) uses an auction to solicit offers from many farmland owners to retire environmentally sensitive cropland. The program accepts a fraction of the offers, ranking them with benefit and cost-effectiveness criteria known as the Environmental Benefits Index. However, auctions can be administered in a variety of ways that can lead to very different outcomes.

In a conservation auction, farmers request a payment rate: a yearly amount that they wish to receive for retiring their land from production and installing a conservation cover. In a simple version of a conservation auction, farmers can request large payments to participate, even larger than the income they would earn from the land if it were farmed. To reduce program costs, program administrators can set a maximum payment amount, called a bid cap. The cap limits the size of the annual payments and is based on an estimate of how much income the farmer would forgo by not farming the land.

The estimated caps may be higher or lower than the farmer's actual loss from not farming the land. A challenge with bid caps is that if the forgone income from farming is underestimated, landowners may be discouraged from submitting offers and participating in the program. In particular, if the cap is set too low for a parcel that has low agricultural productivity (and therefore would be low cost to enroll), the landowner may be unlikely to offer the parcel for enrollment. If this occurs, the program is likely to end up enrolling a parcel with higher agricultural productivity, one that happens to have a correctly estimated bid cap, and the program's overall costs would be higher. In essence, even a bid cap that is correct on average may dissuade a fraction of low-cost parcels from being offered to the program, which can lead to higher overall program costs.
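The last point can be made concrete with a toy calculation on three hypothetical parcels. It rests on simplifying assumptions that are not part of the CRP's actual rules: a landowner offers a parcel only when the cap covers the parcel's true forgone income, each offer is made at that true cost, and the program simply enrolls the cheapest offers (ignoring the environmental ranking). The cap errors average to zero, yet the cheapest parcel drops out.

```python
from dataclasses import dataclass


@dataclass
class Parcel:
    name: str
    true_cost: float  # landowner's actual forgone farm income ($/acre/year), hypothetical
    bid_cap: float    # agency's estimate of that income, used as the payment ceiling


def enroll_cheapest(parcels, n_slots):
    """Enroll the n_slots cheapest offers.

    Simplifying assumption: a landowner offers only if the cap covers the
    parcel's true cost, and then offers at that true cost.
    """
    offers = [p for p in parcels if p.bid_cap >= p.true_cost]
    offers.sort(key=lambda p: p.true_cost)
    chosen = offers[:n_slots]
    return [p.name for p in chosen], sum(p.true_cost for p in chosen)


# Caps are off by -10, +10, and 0, so they are correct on average.
noisy_caps = [Parcel("A", 50, 40), Parcel("B", 60, 70), Parcel("C", 80, 80)]
exact_caps = [Parcel(p.name, p.true_cost, p.true_cost) for p in noisy_caps]

print(sum(p.bid_cap - p.true_cost for p in noisy_caps) / len(noisy_caps))  # 0.0
print(enroll_cheapest(exact_caps, 2))  # (['A', 'B'], 110)
print(enroll_cheapest(noisy_caps, 2))  # (['B', 'C'], 140)
```

Parcel A, the cheapest to enroll, is never offered because its cap is too low, so enrolling two parcels costs 140 instead of 110.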

To examine alternatives to bid caps, researchers simulated auctions in a computer lab, testing whether quotas on groups of participants with similarly productive parcels would yield more cost-effective outcomes. Using students as decisionmakers, researchers found that the quota caused bidders with lower returns (opportunity costs) to bid less than they did in the standard auctions that did not use quotas. This outcome suggests that quotas could make the auctions more competitive and thus more cost effective.
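A quota can be pictured as a set of separate, smaller auctions: bids are grouped by productivity class, and within each group only the lowest bids, up to that group's quota, are accepted. The sketch below is an assumption about the general mechanism, not the lab design: the bid format, the group labels, the single quota shared by all groups, and ranking by bid alone are all hypothetical. One intuition consistent with the finding is that, under a quota, a low-productivity bidder competes only against similar parcels, so bidding up toward what high-productivity landowners would ask is more likely to lose the slot.

```python
from collections import defaultdict


def quota_auction(bids, quota):
    """Accept the `quota` lowest bids within each productivity group.

    bids: iterable of (bidder, group, annual_bid) tuples (hypothetical format).
    Returns {group: [accepted bidders, cheapest first]}.
    """
    by_group = defaultdict(list)
    for bidder, group, bid in bids:
        by_group[group].append((bid, bidder))
    accepted = {}
    for group, offers in by_group.items():
        offers.sort()  # lowest bid first; ties broken by bidder name
        accepted[group] = [bidder for _, bidder in offers[:quota]]
    return accepted


bids = [
    ("L1", "low", 30), ("L2", "low", 45), ("L3", "low", 35),
    ("H1", "high", 90), ("H2", "high", 120),
]
print(quota_auction(bids, quota=1))  # {'low': ['L1'], 'high': ['H1']}
```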

The experiment allowed the researchers to examine a new auction approach inexpensively. The next step could be running a similar simulated auction with farmer participants to determine whether they would behave like the students. Alternatively, if the experiment with students had shown that the quota did not generate lower bids from participants with lower returns, techniques besides a quota could be investigated.

A Lab Experiment With Farmers: Encouraging Neighbors To Enroll in a Conservation Program

Enrolling lands close to one another (spatially adjacent) can enhance the environmental benefits of some conservation programs. For example, in Oregon, research has shown that one stream with many acres of forest buffers does a better job of lowering water temperature (which benefits salmon) than a similar number of acres spread across many streams. Using a set of experiments, researchers tested alternative mechanisms to achieve greater spatial contiguity in a conservation program and the impact that those options would have on program costs and conservation outcomes. In one experiment, researchers tested whether owners of adjacent land would be more likely to offer land for enrollment if a program were to pay participants a bonus to enroll land near one another.

In this lab experiment, researchers found that an extra payment can lead to more adjacent landowners making bids. However, the experiment also revealed a drawback of the bonuses: paying the extra bonus drained the available budget more quickly, so fewer total acres could be enrolled.

The experiment also tested an approach for spatial targeting, which gives priority to enrollment offers on adjacent parcels. The experiment found that spatial targeting resulted in higher program benefits because the amount of parcel adjacency increased. Another variation tested outcomes when both spatial targeting and bonuses for neighboring parcels were used. In this case, overall program outcomes, benefits to society, and spatial adjacency were lower than with spatial targeting alone because the bonuses drained the budget more quickly—leading to fewer acres being enrolled.

In short: the experimental results suggest that spatial targeting—favoring parcels that are adjacent to other enrolled parcels—may lead to better conservation outcomes than bonuses or the combination of spatial targeting and bonuses.
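The budget effect behind the comparison of spatial targeting with and without bonuses can be reproduced with a deliberately simple greedy rule. In this sketch, which is an assumption and not the experiment's design, six hypothetical parcels sit in a row along a stream, each parcel borders the ones on either side, the agency pays each landowner its listed cost, and offers are considered one at a time until a fixed budget runs out. Spatial targeting moves offers that border an enrolled parcel to the front of the queue; the bonus adds a fixed payment to any parcel enrolled next to an enrolled neighbor. The sketch does not model how bonuses change landowners' willingness to offer land, which is what the lab experiment measured.

```python
def enroll(costs, budget, spatial=False, bonus=0.0):
    """Greedy enrollment of hypothetical stream parcels 0..n-1 (parcel i borders i-1 and i+1).

    spatial: consider offers bordering an already-enrolled parcel first.
    bonus:   extra payment to a parcel enrolled next to an enrolled neighbor.
    Returns (enrolled parcels, number of adjacent enrolled pairs).
    """
    enrolled, spent = set(), 0.0
    remaining = set(range(len(costs)))

    def borders_enrolled(i):
        return (i - 1) in enrolled or (i + 1) in enrolled

    while remaining:
        # Smallest key goes first: with spatial targeting, bordering offers (False)
        # come before the rest (True); within each tier, cheapest first.
        i = min(remaining, key=lambda j: (spatial and not borders_enrolled(j), costs[j]))
        remaining.discard(i)
        price = costs[i] + (bonus if borders_enrolled(i) else 0.0)
        if spent + price <= budget:  # skip any offer the remaining budget cannot cover
            enrolled.add(i)
            spent += price
    adjacent_pairs = sum(1 for i in enrolled if i + 1 in enrolled)
    return sorted(enrolled), adjacent_pairs


costs = [10, 30, 12, 40, 11, 35]  # hypothetical cost of each parcel, in $1,000s
print(enroll(costs, 60))                          # ([0, 2, 4], 0)
print(enroll(costs, 60, spatial=True))            # ([0, 1, 2], 2)
print(enroll(costs, 60, spatial=True, bonus=15))  # ([0, 1], 1)
```

A plain cheapest-first rule enrolls three scattered parcels. Spatial targeting enrolls three contiguous ones for the same budget. Adding the bonus raises the price of the second parcel from 30 to 45, which leaves too little budget for the third, so the program ends up with fewer parcels and less contiguity than under spatial targeting alone.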

A Field Experiment With Farmers: The Value of Upfront Payments Versus Delayed Payments

In many programs, farmers receive annual payments over some period of time. Some programs make a one-time upfront payment, and others let landowners choose between the two.

In this experiment, researchers tested farmers’ willingness to take an immediate lower payment, rather than waiting for a larger payment in the future. By mimicking a real program, the experiment placed farmers under conditions similar to what they might face when deciding whether to participate in a conservation program. This type of field experiment is more costly than a controlled laboratory experiment, like those previously discussed, but tests how actual farmers may respond in realistic conditions.

When offered the two timing options, over 70 percent of the farmers chose to receive an immediate lower payment rather than a larger payment in the future. By offering several different payment choices, researchers were able to estimate the discount rate farmers applied when choosing between current and future payments. Even at the highest value offered (corresponding to a 28-percent interest rate), only 10 percent of farmers changed their minds and chose the later payment. Although such a change could involve other tradeoffs, these results suggest that conservation programs could save money if they made more of their payments upfront. Using the choices farmers made in an experimental setting such as this, program managers could also calculate how much to discount these more immediate payments. Another experiment could test whether upfront payments attract additional farmers to participate.
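The arithmetic behind these choices can be sketched as two small functions. The first converts a choice between an amount now and a larger amount after a delay into an annual interest rate. The second computes the upfront sum equivalent to a stream of annual payments at a given discount rate. Both use annual compounding and end-of-year payments; these conventions are assumptions, since simple-interest or monthly-compounding conventions give somewhat different numbers. The function names and dollar amounts are hypothetical.

```python
def implied_annual_rate(amount_now, amount_later, months):
    """Annual interest rate, compounded annually, that makes amount_now today
    worth amount_later after `months` months.

    A farmer who still takes amount_now is discounting the future at a rate at
    least this high.
    """
    return (amount_later / amount_now) ** (12 / months) - 1


def upfront_equivalent(annual_payment, years, rate):
    """Present value of `years` equal payments made at the end of each year."""
    return sum(annual_payment / (1 + rate) ** t for t in range(1, years + 1))


# Hypothetical choice: $1,000 now versus $1,200 in 9 months.
print(round(implied_annual_rate(1000, 1200, 9), 3))  # 0.275
# Hypothetical contract: $1,000 a year for 10 years, valued at a 10% discount rate.
print(round(upfront_equivalent(1000, 10, 0.10), 2))  # 6144.57
```

If farmers discount future payments at a higher rate than the program's own cost of funds, an upfront sum smaller than the undiscounted total of the annual payments can be worth as much to the farmer, which is one way an agency could translate choices like these into savings.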

Administrative Experiments: Using Outreach Letters To Increase Interest in a Conservation Program and To Encourage Voter Participation in Farm Service Agency County Committee Elections

Experimenters can sometimes use a program's administrative data to measure the effect of a program modification, and thereby avoid the burden and expense of collecting data through additional surveys or focus groups. For example, attracting new participants and re-enrolling those with expiring contracts can affect the total environmental benefits of conservation programs. ERS researchers tested whether outreach letters would nudge farmers to re-enroll or to enroll for the first time in the CRP.

One hundred thousand eligible farmers were each sent one of several versions of an outreach letter. Some received a basic letter. Others received letters with a peer comparison that described the number of CRP participants in their State. Still others received letters that also included a statement invoking “social norms,” which in this case highlighted how participants who adopted wildlife-friendly covers (such as native grasses) increased their chance of being accepted while also improving water quality, so that “everyone wins.” To ensure that other factors affecting farmers' decisions to participate did not drive the results, the researchers randomly selected who would receive a given letter and who would not. Because the experiment occurred within the conservation program itself, the findings should apply to all eligible participants.

The researchers found that farmers with expiring contracts who received the letters made more offers to re-enroll than those who did not, at a printing and mailing cost of $41 per additional offer. All of the messaging formats (the basic letter, the peer comparison, and the social norm) were equally effective. The results suggest that sending reminder letters to farmers with expiring contracts would generate offers on an additional 500,000 acres over a full 10-year cycle (the CRP currently enrolls about 24 million acres). Having more offers to choose from, for a fixed number of acres to be enrolled, gives program managers more flexibility to enroll the acres most likely to achieve program goals.
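A cost-per-additional-offer figure is the kind of number a randomized control group makes possible. Divide the cost of each letter by the extra offers each letter produced, measured as the difference in offer rates between farmers who got a letter and those who did not. The function name and the inputs below are hypothetical and are not the study's data.

```python
def cost_per_additional_offer(cost_per_letter, offer_rate_letter, offer_rate_no_letter):
    """Mailing cost divided by the additional offers generated per letter sent.

    The no-letter (control) rate estimates how many offers would have arrived anyway.
    """
    extra_offers_per_letter = offer_rate_letter - offer_rate_no_letter
    if extra_offers_per_letter <= 0:
        raise ValueError("the letters did not increase the offer rate")
    return cost_per_letter / extra_offers_per_letter


# Hypothetical inputs: $0.50 per letter; 30% of recipients and 28% of non-recipients made offers.
print(round(cost_per_additional_offer(0.50, 0.30, 0.28), 2))  # 25.0
```

Without the randomly chosen group that received no letter, there would be no credible estimate of how many offers would have arrived anyway, and the cost per additional offer could not be computed.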

However, eligible but unenrolled farmers were not impacted by the letter: Non-participant farmers who received the letters and non-participants who did not receive a letter were equally unlikely to offer to participate in CRP. This group of farmers—those who do not participate in the program—may need a different form of outreach.

In a similar administrative experiment, letters and postcards were sent to all eligible farmers to encourage them to vote in County Committee elections. This study found that a carefully designed outreach effort (with personalized letters and postcards) could increase election participation for less than $29.00 per potential voter.

These examples demonstrate how experiments can be used to generate evidence of costs and benefits from different options for creating and modifying agricultural and conservation programs. Opportunities for experimentation occur frequently within the normal policy process, including:

- When beginning a new program or during a program change

- When an overabundance of people want to participate in a program and the program must determine whom to enroll

- When programs change their goals or the populations they serve

- When designing new ways to increase interest or participation in programs that could yield large net social benefits, such as through increased competition or improved targeting.

Economic experiments can provide another tool to design and improve programs and services within the Federal Government.

This article is drawn from:

- Higgins, N., Hellerstein, D., Wallander, S. & Lynch, L. (2017). Economic Experiments for Policy Analysis and Program Design: A Guide for Agricultural Decisionmakers. U.S. Department of Agriculture, Economic Research Service. ERR-236.
